This is the sequel to our previous post on hRPC, written after hRPC matured and got an actual spec. It contains much more information about hRPC, as well as tutorials for it.

This post is mirrored from our post on dev.to

Co-authored by: Yusdacra (yusdacra@GitHub), Bluskript (Bluskript@GitHub), Pontaoski (pontaoski@GitHub)

hRPC is a new RPC system that we, at Harmony, have been developing and using for our decentralized chat protocol. It uses Protocol Buffers (Protobufs) as a wire format, and supports streaming.

hRPC is primarily made for user-facing APIs and aims to be as simple to use as possible.

If you would like to learn more, the hRPC specification can be found here.

What is an RPC system?

If you know traditional API models like REST, then you can think of RPC as a more integrated version of that. Instead of defining requests by endpoint and method, requests are defined as methods on objects or services. With good code generation, an RPC system is often easier and safer to use for both clients and servers.
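
As a rough illustration of that difference, here is a minimal Rust sketch. Every name in it (`Message`, `ChatService`, `InMemoryChat`, `rest_style_dispatch`) is made up for this example and is not part of hRPC or of any generated code. The free function shows REST-style dispatch on method and path strings, while the trait shows the RPC style, where each request is a typed method on a service.

```rust
/// A chat message payload (hypothetical, for illustration only).
#[derive(Debug, Clone, PartialEq)]
pub struct Message {
    pub content: String,
}

/// REST style: a request is identified by an HTTP method and a path,
/// so the handler matches on strings and interprets the body itself.
pub fn rest_style_dispatch(method: &str, path: &str, body: &str) -> Result<String, String> {
    match (method, path) {
        ("POST", "/messages") => Ok(format!("stored: {}", body)),
        _ => Err(format!("no route for {} {}", method, path)),
    }
}

/// RPC style: a request is a typed method on a service.
/// A code generator would normally emit a trait like this from a schema.
pub trait ChatService {
    fn send_message(&mut self, message: Message) -> Result<(), String>;
}

/// A toy in-memory implementation of the service.
#[derive(Default)]
pub struct InMemoryChat {
    pub log: Vec<Message>,
}

impl ChatService for InMemoryChat {
    fn send_message(&mut self, message: Message) -> Result<(), String> {
        // The compiler already guarantees that `message` has the right shape.
        self.log.push(message);
        Ok(())
    }
}
```

With the trait, a typo in a method name or a wrongly shaped payload is a compile error, whereas the string-based dispatcher only finds out at runtime.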

Why hRPC?

hRPC uses REST to model plain unary requests, and WebSockets to model streaming requests. As such, it should be easy to write a library for the languages that don't already support it.
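
To make that split concrete, here is a small sketch of how a client library might pick a URL for each kind of RPC. The path layout `/{package}.{Service}/{Method}` is an assumption borrowed from the convention gRPC and Twirp use, and `RpcKind` and `endpoint_url` are hypothetical names; the hRPC specification is the authority on the exact rules.

```rust
/// Whether an RPC returns a single response or a stream of them.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum RpcKind {
    Unary,
    Streaming,
}

/// Builds the URL a client would talk to for a given RPC.
///
/// Assumption: the path is `/{package}.{Service}/{Method}`.
pub fn endpoint_url(
    host: &str,
    secure: bool,
    package: &str,
    service: &str,
    method: &str,
    kind: RpcKind,
) -> String {
    // Unary calls are plain HTTP requests, streams are WebSockets.
    let scheme = match (kind, secure) {
        (RpcKind::Unary, false) => "http",
        (RpcKind::Unary, true) => "https",
        (RpcKind::Streaming, false) => "ws",
        (RpcKind::Streaming, true) => "wss",
    };
    format!("{}://{}/{}.{}/{}", scheme, host, package, service, method)
}
```

Because both halves are ordinary web technology, a new language binding mostly needs an HTTP client, a WebSocket client, and a Protobuf library.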

hRPC features:

- Type safety

- Strict protocol conformance on both ends

- Easy streaming logic

- More elegant server and client code with interfaces/traits and endpoint generation

- Cross-language code generation

- Smaller request sizes

- Faster request parsing

Why Not Twirp?

Twirp and hRPC have a lot in common, but the dealbreaker for Harmony is Twirp's lack of support for streaming RPCs. Harmony's vision was to represent all endpoints in Protobuf format, and as a result Twirp became fundamentally incompatible.

Why Not gRPC?

gRPC is the de facto RPC system; in fact, Protobuf and gRPC are used together much of the time. So the question is, why would you want to use hRPC instead?

Unfortunately, gRPC has many limitations, and most of them result from its low-level nature.

The lack of web support

At Harmony, support for web-based clients was a must, as was keeping things simple to implement. gRPC had neither. As stated by gRPC:

> It is currently impossible to implement the HTTP/2 gRPC spec in the browser, as there is simply no browser API with enough fine-grained control over the requests.

The gRPC slowloris

gRPC streams are essentially just long-running HTTP requests. Whenever data needs to be sent, gRPC just sends a new HTTP/2 frame. The issue with this, however, is that most reverse proxies do not understand gRPC streaming. At Harmony, it was fairly common for sockets to disconnect because they were idle for long stretches of time. NGINX and other reverse proxies would see these idle connections and close them, causing issues for all of our clients. hRPC's use of WebSockets solves this problem, as reverse proxies are fully capable of understanding them.

In general, hRPC retains the bulk of gRPC's advantages while being much simpler.

Why not plain REST?

Protobuf provides a more compact binary format for requests than JSON. It lets the user define a schema for their messages and RPCs, which makes server and client code generation easy. Protobuf also has features that are very useful for these kinds of schemas (such as extensions), and as such is a nice fit for hRPC.
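
To get a feel for the size difference, the sketch below hand-encodes a message with a single string field numbered 1 (the same shape as the `Message` type used later in this post) and compares it to the equivalent JSON. The helpers `encode_message` and `encode_json` are hypothetical and exist only for this comparison; in a real project a library such as `prost` does the encoding.

```rust
/// Encodes an unsigned integer as a Protobuf base-128 varint.
fn encode_varint(mut value: u64, out: &mut Vec<u8>) {
    while value >= 0x80 {
        // Low 7 bits first, with the continuation bit set.
        out.push(((value as u8) & 0x7F) | 0x80);
        value >>= 7;
    }
    out.push(value as u8);
}

/// Hand-encodes `message Message { string content = 1; }`.
pub fn encode_message(content: &str) -> Vec<u8> {
    // proto3 omits fields that hold their default value (the empty string).
    if content.is_empty() {
        return Vec::new();
    }
    let mut out = Vec::with_capacity(content.len() + 2);
    // Field key: (field_number << 3) | wire_type = (1 << 3) | 2 = 0x0A.
    out.push(0x0A);
    // Length-delimited fields carry their byte length as a varint.
    encode_varint(content.len() as u64, &mut out);
    out.extend_from_slice(content.as_bytes());
    out
}

/// The same payload as a JSON object, with only minimal escaping.
pub fn encode_json(content: &str) -> String {
    let escaped = content.replace('\\', "\\\\").replace('"', "\\\"");
    format!("{{\"content\":\"{}\"}}", escaped)
}
```

For the content `hi`, the Protobuf encoding is 4 bytes (`0A 02 68 69`), while the JSON text `{"content":"hi"}` is 16 bytes, because JSON repeats the field name in every message.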

A Simple Chat Example

Let's try out hRPC with a basic chat example. This is a simple system that supports posting chat messages which are then streamed back to all clients. Here is the protocol:

syntax = "proto3";

package chat;

// Empty object which is used in place of nothing

message Empty { }

// Object that represents a chat message

message Message { string content = 1; }

service Chat {

// Endpoint to send a chat message

rpc SendMessage(Message) returns (Empty);

// Endpoint to stream chat messages

rpc StreamMessages(Empty) returns (stream Message);

}

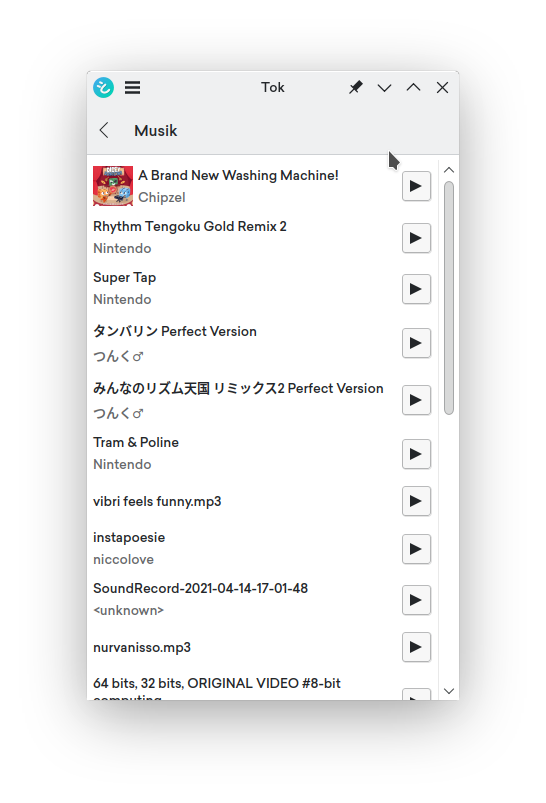

By the end, this is what we will have:

Getting Started

NOTE: If you don't want to follow along, you can find the full server example in the hRPC examples repository.

Let's start by writing a server that implements this. We will use hrpc-rs, which is a Rust implementation of hRPC.

Note: If you don't have Rust installed, you can install it from the rustup website.

We start by creating our project with cargo new chat-example --bin.

Now we will need to add a few dependencies to Cargo.toml:

[build-dependencies]

# `hrpc-build` will handle generating Protobuf code for us

# The features we enable here match the ones we enable for `hrpc`

hrpc-build = { version = "0.29", features = ["server", "recommended"] }

[dependencies]

# `prost` provides us with protobuf decoding and encoding

prost = "0.9"

# `hrpc` is the `hrpc-rs` main crate!

# Enable hrpc's server features, and the recommended transport

hrpc = { version = "0.29", features = ["server", "recommended"] }

# `tokio` is the async runtime we use

# Enable tokio's macros so we can mark our main function, and enable multi

# threaded runtime

tokio = { version = "1", features = ["rt", "rt-multi-thread", "macros"] }

# `tower-http` is a collection of HTTP related middleware

tower-http = { version = "0.1", features = ["cors"] }

# Logging utilities

# `tracing` gives us the ability to log from anywhere we want

tracing = "0.1"

# `tracing-subscriber` gives us a terminal logger

tracing-subscriber = "0.3"

Don't forget to check if your project compiles with cargo check!

Building the Protobufs

Now, let's get basic protobuf code generation working.

First, go ahead and copy the chat protocol from earlier into src/chat.proto.

After that we will need a build script. Make a file called build.rs in the root of the project:

// build.rs

fn main() {

// The path here is the path to our protocol file

// which we copied in the previous step!

//

// This will generate Rust code for our protobuf definitions.

hrpc_build::compile_protos("src/chat.proto")

.expect("could not compile the proto");

}

And lastly, we need to import the generated code:

// src/main.rs

// Our chat package generated code

pub mod chat {

// This imports all the generated code for you

hrpc::include_proto!("chat");

}

// This is empty for now!

fn main() { }

Now you can run cargo check to see if it compiles!

Implementing the Protocol

In this section, we will implement the protocol endpoints.

First, get started by importing the stuff we will need:

// src/main.rs

// top of the file

// Import everything from chat package, and the generated

// server trait

use chat::{*, chat_server::*};

// Import the server prelude, which contains

// often used code that is used to develop servers.

use hrpc::server::prelude::*;

Now, let's define the business logic for the Chat server. This is a simple example, so we can just use channels from tokio::sync::broadcast. This will allow us to broadcast our chat messages to all clients connected.

// ... other `use` statements

// The channel we will use to broadcast our chat messages

use tokio::sync::broadcast;
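
If broadcast channels are new to you, the following std-only sketch shows the fan-out idea: every subscriber receives its own copy of each message. `Broadcaster` is a hypothetical stand-in written for this post, not tokio's implementation. Unlike `tokio::sync::broadcast`, it is synchronous and unbounded.

```rust
use std::sync::mpsc::{channel, Receiver, Sender};

/// A tiny fan-out hub: every subscriber receives a copy of each message.
#[derive(Default)]
pub struct Broadcaster {
    subscribers: Vec<Sender<String>>,
}

impl Broadcaster {
    /// Registers a new subscriber and returns its receiving end.
    pub fn subscribe(&mut self) -> Receiver<String> {
        let (tx, rx) = channel();
        self.subscribers.push(tx);
        rx
    }

    /// Sends a copy of `message` to every live subscriber and returns how
    /// many received it. Subscribers whose receiver was dropped are removed.
    pub fn send(&mut self, message: &str) -> usize {
        self.subscribers
            .retain(|tx| tx.send(message.to_string()).is_ok());
        self.subscribers.len()
    }
}
```

In the chat server, each connected client plays the role of one subscriber, and every posted chat message is one `send`.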

Afterwards we can define our service state:

pub struct ChatService {

// The sender half of our broadcast channel.

//

// We will use its `.subscribe()` method to get a

// receiver when a client connects.

message_broadcast: broadcast::Sender<Message>,

}

Then we define a simple constructor:

impl ChatService {

// Creates a new `ChatService`

fn new() -> Self {

// Create a broadcast channel that can hold at most 100

// pending items. The exact capacity doesn't matter in

// our case, so the number is arbitrary.

let (tx, _) = broadcast::channel(100);

Self {

message_broadcast: tx,

}

}

}

Now we need to implement the generated trait for our service:

impl Chat for ChatService {

// This corresponds to the SendMessage endpoint

//

// `handler` is a Rust macro that is used to transform

// an `async fn` into a properly typed hRPC trait method.

#[handler]

async fn send_message(&self, request: Request<Message>) -> ServerResult<Response<Empty>> {

// we will add this in a bit

}

// This corresponds to the StreamMessages endpoint

#[handler]

async fn stream_messages(

&self,

// We don't use the request here, so we can just ignore it.

// The leading `_` stops Rust from complaining about unused

// variables!

_request: Request<()>,

socket: Socket<Message, Empty>,

) -> ServerResult<()> {

// we will add this in a bit

}

}

And now for the actual logic, let's start with message sending:

#[handler]

async fn send_message(&self, request: Request<Message>) -> ServerResult<Response<Empty>> {

// Extract the chat message from the request

let message = request.into_message().await?;

// Log the message we just got. We do this before broadcasting,

// because `send` takes ownership of `message`.

tracing::info!("got message: {}", message.content);

// Try to broadcast the chat message across the channel.

// `send` only fails when there are no active receivers;

// in that case, return an error.

if self.message_broadcast.send(message).is_err() {

return Err(HrpcError::new_internal_server_error("couldn't broadcast message"));

}

Ok((Empty {}).into_response())

}

The streaming logic is simple: subscribe to the broadcast channel, then read messages from that channel until there's an error:

#[handler]

async fn stream_messages(

&self,

_request: Request<()>,

socket: Socket<Message, Empty>,

) -> ServerResult<()> {

// Subscribe to the message broadcaster

let mut message_receiver = self.message_broadcast.subscribe();

// Poll for received messages...

while let Ok(message) = message_receiver.recv().await {

// ...and send them to client.

socket.send_message(message).await?;

}

Ok(())

}
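
The control flow of this handler can be tried out without any async machinery. The sketch below uses a hypothetical helper, `forward_until_closed`, built on `std::sync::mpsc` instead of tokio and hRPC types. It stops in the same two ways as the handler: when the channel closes, or when sending to the client fails.

```rust
use std::sync::mpsc::Receiver;

/// Forwards every message from `receiver` to `sink` until either side fails.
/// Returns the number of messages forwarded, or the sink's error.
pub fn forward_until_closed<T, E>(
    receiver: &Receiver<T>,
    mut sink: impl FnMut(T) -> Result<(), E>,
) -> Result<usize, E> {
    let mut forwarded = 0;
    // `recv` returns `Err` once every sender has been dropped, which
    // ends the loop, like the `while let Ok(..)` in the handler.
    while let Ok(message) = receiver.recv() {
        // A failing sink (think: a disconnected client) aborts via `?`.
        sink(message)?;
        forwarded += 1;
    }
    Ok(forwarded)
}
```

A closed channel counts as a normal end of the stream, while a failed send is reported to the caller as an error.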

Let's put all of this together in the main function. We'll make a new chat server, where we pass in our implementation of the service. We'll serve it using the Hyper HTTP transport, although this can be swapped out for another transport if needed.

// ...other imports

// Import our CORS middleware

use tower_http::cors::CorsLayer;

// Import the Hyper HTTP transport for hRPC

use hrpc::server::transport::http::Hyper;

// `tokio::main` is a Rust macro that converts an `async fn`

// `main` function into a synchronous `main` function, and enables

// you to use the `tokio` async runtime. The runtime we use is the

// multithreaded runtime, which is what we want.

#[tokio::main]

async fn main() -> Result<(), BoxError> {

// Initialize the default logging in `tracing-subscriber`

// which is logging to the terminal

tracing_subscriber::fmt().init();

// Create our chat service

let service = ChatServer::new(ChatService::new());

// Create our transport that we will use to serve our service

let transport = Hyper::new("127.0.0.1:2289")?;

// Layer our transport for use with CORS.

// Since this is specific to HTTP, we use the transport's layer method.

//

// Note: A "layer" can simply be thought of as a middleware!

let transport = transport.layer(CorsLayer::permissive());

// Serve our service with our transport

transport.serve(service).await?;

Ok(())

}

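Notice how in the code above, we needed to specify a CORS layer. This is because the web client runs in a browser and is served from a different origin than the chat server. The next step of the process, of course, is to write a frontend for this.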
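
To see why the CORS layer matters, here is a simplified model of the check a browser performs before it lets a page read a response. `Origin` and `browser_allows` are hypothetical names for this sketch, and the model deliberately ignores preflight requests and credentials. The dev-server origin `http://localhost:3000` in the usage note is also just an assumed example.

```rust
/// An origin as browsers define it: scheme, host and port together.
#[derive(Debug, Clone, PartialEq)]
pub struct Origin {
    pub scheme: String,
    pub host: String,
    pub port: u16,
}

impl Origin {
    pub fn new(scheme: &str, host: &str, port: u16) -> Self {
        Self {
            scheme: scheme.to_string(),
            host: host.to_string(),
            port,
        }
    }

    /// Serializes the origin as `scheme://host:port`.
    /// Simplification: browsers omit the port when it is the scheme default.
    pub fn serialize(&self) -> String {
        format!("{}://{}:{}", self.scheme, self.host, self.port)
    }
}

/// Decides whether a browser lets `page` read a response from `api`, given
/// the `Access-Control-Allow-Origin` header the server sent (if any).
pub fn browser_allows(page: &Origin, api: &Origin, allow_origin: Option<&str>) -> bool {
    if page == api {
        // Same-origin requests are not subject to CORS.
        return true;
    }
    match allow_origin {
        // A wildcard admits every origin.
        Some("*") => true,
        // Otherwise the header has to name the page's origin exactly.
        Some(value) => value == page.serialize(),
        // No header at all: the browser blocks the response.
        None => false,
    }
}
```

A page served from `http://localhost:3000` that calls the server on `127.0.0.1:2289` is cross-origin, so without the header the browser would block the response. A permissive policy answers with a wildcard, which is convenient for a demo but usually too broad for production.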

Frontend (CLI)

If you don't want to use the web client example, you can try the CLI client in the hRPC examples repository. Keep in mind that this post doesn't cover writing a CLI client.

To run it, git clone the linked repository, navigate to chat/tui-client, and run cargo run. Instructions are also available in the READMEs in the repository.

Frontend (Vue 3 + Vite + TS)

NOTE: If you don't want to follow along, you can find the full web client example in the hRPC examples repository.

The setup is a basic Vite project using the Vue template, with all of the boilerplate demo code removed. Once you have the project made, install the following packages:

npm i @protobuf-ts/runtime @protobuf-ts/runtime-rpc @harmony-dev/transport-hrpc

npm i -D @protobuf-ts/plugin @protobuf-ts/protoc windicss vite-plugin-windicss

In order to get Protobuf generation working, we'll use Buf, a tool specifically built for building protocol buffers. Start by making the following buf.gen.yaml:

version: v1

plugins:

- name: ts

out: gen

opt: generate_dependencies,long_type_string

path: ./node_modules/@protobuf-ts/plugin/bin/protoc-gen-ts

The config above invokes the code generator we installed, enables a string representation for longs, and generates code for built-in Google types too.

Now, paste the protocol from earlier into protocol/chat.proto in the root of the folder, and run buf generate ./protocol. If you see a gen folder appear, then the code generation worked! ✅

The Implementation

When building the UI, it's useful to have a live preview of our site. Run npm run dev in a terminal to start a dev server.

The entire implementation will be done in src/App.vue, the main Vue component for the site.

For the business logic, we'll be using the new fancy and shiny Vue 3 script setup syntax. Start by defining it:

<script setup lang="ts">

</script>

Now, inside this block, we first create a chat client by passing our client configuration into the HrpcTransport constructor:

// Vue helpers used further down in this block
import { onMounted, reactive, ref } from "vue";

import { ChatClient } from "../gen/chat.client";

import { HrpcTransport } from "@harmony-dev/transport-hrpc";

const client = new ChatClient(

new HrpcTransport({

baseUrl: "http://127.0.0.1:2289",

insecure: true

})

);

Next, we will define a reactive list of messages and the content of the text input:

const content = ref("");

const msgs = reactive<string[]>([]);

These reactive values are bound to the UI, and updating them is what makes a change show up on screen.

Now let's add our API logic:

// when the component mounts (page loads)

onMounted(() => {

// start streaming messages

client.streamMessages({}).responses.onMessage((msg) => {

// add the message to the list

msgs.push(msg.content);

});

});

// keyboard handler for the input

const onKey = (ev: KeyboardEvent) => {

if (ev.key !== "Enter") return; // only send a message on enter

client.sendMessage({

content: content.value,

}); // send a message to the server

content.value = ""; // then clear the textbox

};

Now let's add some layout and styling, with a registered event handler for the input and a v-for loop to display the messages:

<template>

<div class="h-100vh w-100vw bg-surface-900 flex flex-col justify-center p-3">

<div class="flex-1 p-3 flex flex-col gap-2 overflow-auto">

<p class="p-3 max-w-30ch rounded-md bg-surface-800" v-for="(m, i) in msgs" :key="i">{{ m }}</p>

</div>

<input

class="

p-2

bg-surface-700

rounded-md

focus:outline-none focus:ring-3

ring-secondary-400

mt-2

"

v-model="content"

@keydown="onKey"

/>

</div>

</template>

If you are unsure what these classes mean, take a look at WindiCSS to learn more.

And with that we complete our chat application!

Other Implementations

While we used Rust for server and TypeScript for client here, hRPC is cross-language. The harmony-development organisation on GitHub has other implementations, most located in the hRPC repo.
